This hands-on guide shows how to monitor a Kubernetes cluster with Datadog using a local Minikube and the OpenTelemetry Demo as a realistic microservices showcase. You’ll route telemetry to Datadog via the Datadog Agent’s OTLP endpoint, then use Kubernetes Overview/Explorer, APM, and Error Tracking to troubleshoot common workload failures. By the end, you’ll deploy fast, see metrics/logs/traces in one place, and turn fixes into actionable monitors.

Monitoring Kubernetes is Tricky

Kubernetes is dynamic and noisy: a single mistyped resource value or env var can strand replicas in Pending or flip them into CrashLoopBackOff. Host-centric monitoring misses the picture, so you need cross-signal visibility tied to Kubernetes context.

What you actually need:

- Cluster & workload state – Deployments/ReplicaSets/Pods, conditions and Events to explain why something isn’t scheduling

- Golden signals – latency, errors, traffic, and saturation

- End‑to‑end traces – tie user symptoms to service bottlenecks

- Fast pivots between infra → workload → services → code → logs

Why Datadog for Monitoring Kubernetes

Datadog gives you a Kubernetes-native, cross-signal view: metrics, logs, traces, and events all in one place, with cluster context such as nodes, namespaces, Deployments, and Pods baked in. You can pivot from infrastructure → workload → service → code in a couple of clicks, correlate symptoms with changes, and drive down Mean Time To Repair (MTTR) without bouncing between tools.

Kubernetes Overview & Explorer — Live inventory of clusters, nodes, namespaces, Deployments, and Pods with status, Events, and quick pivots to YAML, logs, and traces.

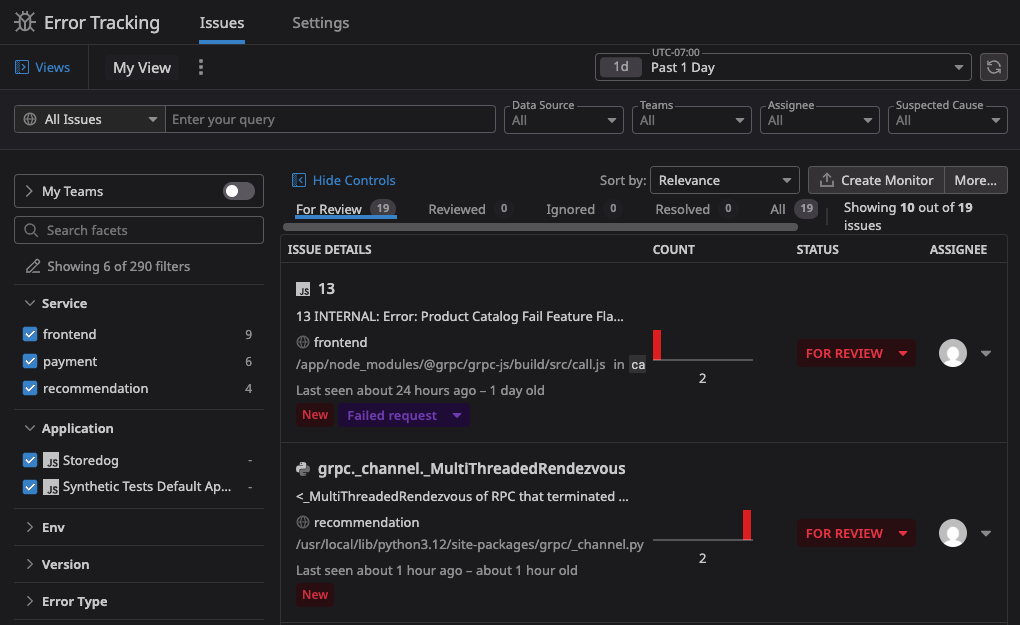

APM + Error Tracking — Group exceptions by signature, tie spikes to releases and services, jump straight into traces and span‑based metrics to see where time is spent.

OpenTelemetry‑first — Send OTLP directly to the Datadog Agent; keep vendor‑neutral instrumentation and your existing OTel SDKs.

Out‑of‑the‑box Kubernetes signals — KSM Core + Orchestrator Explorer power ready‑made dashboards and monitor templates for crash loops, pending pods, throttling, node pressure, and more.

What is OpenTelemetry

OpenTelemetry (OTel) is the open standard for app telemetry (traces, metrics, logs) with portable SDKs and the OTLP wire protocol. Instrument once, keep your options open, and forward wherever you need.

Two common wiring patterns to Datadog

- Approach #1: OTel Collector → Datadog

The OTel Demo ships with an embedded Collector. Point it at Datadog using the Datadog exporter + extension.

Pros: powerful pipelines/processors, easy fan-out to multiple backends.

- Approach #2: Datadog Agent (OTLP ingest)

Deploy the Datadog Operator and have services send OTLP directly to the Datadog Agent on 4317/4318.

Pros: fewer moving parts, instant Kubernetes inventory via Kube State Metrics Core + Orchestrator Explorer, one in-cluster Service to target.

Why I pick the Datadog Agent approach (#2)

- Production-like: many teams already run the Datadog Agent, so pointing OTLP at it is a small, low-risk change.

- OTel‑first: you instrument your code with OpenTelemetry SDKs, keeping vendor-neutral instrumentation while still leveraging Datadog’s platform features.

Prerequisites

- macOS/Linux shell

- Docker Desktop, minikube, kubectl, Helm

- Datadog account + Datadog API key

I am running minikube with the Docker driver, with 8 CPU cores and 8 GB of RAM allocated to the cluster:

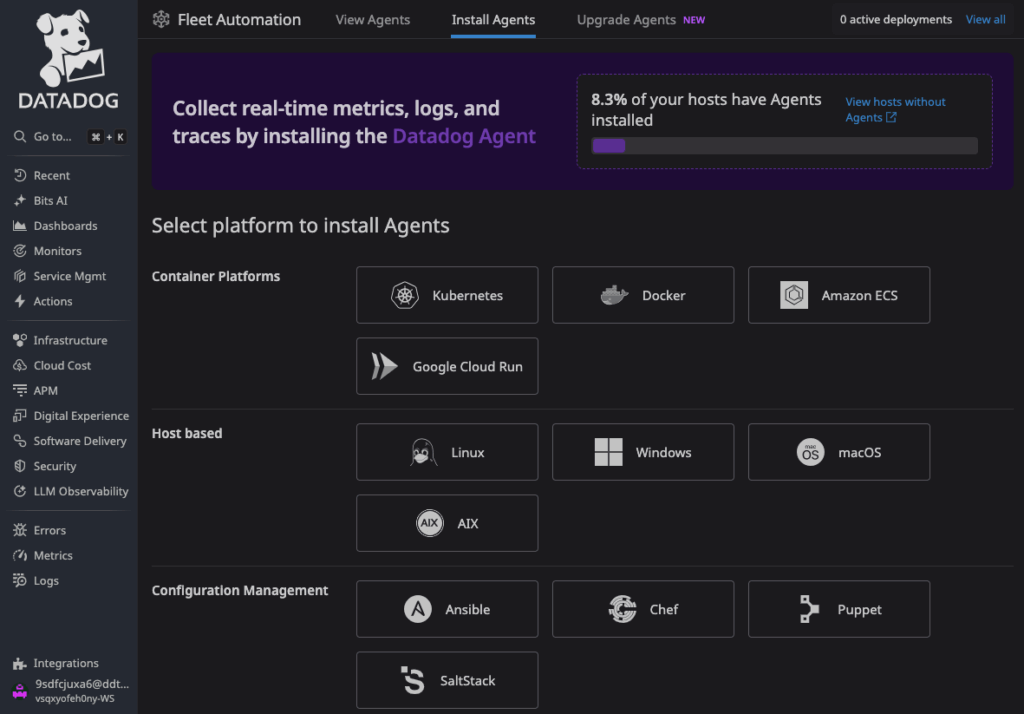

minikube start --driver=docker --memory=8192 --cpus=8

Install the Datadog Operator & Agent (OTLP ingest)

I’ll deploy the Datadog Operator and a DatadogAgent CR that exposes the Agent’s OTLP receivers (4317/4318). Then we’ll point the OTel Demo services directly at the Agent.

1) Create a secret to store Datadog API key and site

kubectl create ns datadog-operator

kubectl -n datadog-operator create secret generic datadog-secret \
  --from-literal="DD_API_KEY=<YOUR_DD_API_KEY>" \
  --from-literal="DD_SITE_PARAMETER=datadoghq.com"

2) Install the Datadog Operator

helm repo add datadog https://helm.datadoghq.com

helm repo update

helm upgrade --install datadog-operator datadog/datadog-operator -n datadog-operator

3) Configure Datadog Agent via custom resource

Create a file named datadog-agent-minikube.yaml with the following content to configure the Datadog Agent:

- Global config: sets the cluster name (minikube) and site (datadoghq.com), and pulls the API key from the datadog-secret (DD_API_KEY).

- otelCollector.enabled: true turns on the Agent’s OTLP receivers (4317/4318) so our OTel Demo app can send traces/metrics/logs directly to the Agent.

kind: "DatadogAgent"
apiVersion: "datadoghq.com/v2alpha1"
metadata:
  name: "datadog"
  namespace: datadog-operator
spec:
  global:
    site: "datadoghq.com"
    credentials:
      apiSecret:
        secretName: "datadog-secret"
        keyName: "DD_API_KEY"
    tags:
      - "env:minikube"
    kubelet:
      tlsVerify: false
    clusterName: "minikube"
  features:
    logCollection:
      enabled: true
      containerCollectAll: true
    otelCollector:
      enabled: true
    kubeStateMetricsCore:
      enabled: true
    orchestratorExplorer:
      enabled: true

After the Datadog Operator spins up the Agent, our OTel Demo services can point to it to stream telemetry to Datadog. Apply the change:

kubectl apply -f datadog-agent-minikube.yaml

kubectl -n datadog-operator get svc datadog-agent -o wide

# Expect ports 4317 (grpc) and 4318 (http)

Configure & Deploy the OTel Demo

We’ll stand up the OpenTelemetry Demo on Kubernetes so you have a realistic microservices playground. We’ll modify the deployment with a custom values file that wires telemetry to Datadog via the Datadog Agent’s OTLP ingest endpoint.

1) Create a namespace and add the Helm repo

kubectl create ns otel-dd

helm repo add open-telemetry https://open-telemetry.github.io/opentelemetry-helm-charts

helm repo update

2) Configure the OTel demo (lightweight footprint)

This patches app services only (not infra like Kafka/Redis/loadgen) and points them to the Datadog Agent’s in-cluster OTLP gRPC endpoint. It also disables the built-ins (e.g. Grafana, Jaeger, Prometheus, OpenSearch) that we won’t use in this Datadog approach.
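As a rough illustration of how that endpoint and the related environment variables fit together, here is a minimal Python sketch. It assumes the Agent is reachable through a Service named datadog-agent in the datadog-operator namespace (the names used in this guide) and relies on the standard OTLP ports, 4317 for gRPC and 4318 for HTTP. The helper names `agent_otlp_endpoint` and `otlp_env` are made up for this example.

```python
# Standard OTLP ports: 4317 for gRPC, 4318 for HTTP/protobuf.
OTLP_PORTS = {"grpc": 4317, "http/protobuf": 4318}


def agent_otlp_endpoint(service: str, namespace: str, protocol: str = "grpc") -> str:
    """Build the in-cluster DNS URL of a Service that accepts OTLP."""
    port = OTLP_PORTS[protocol]
    return f"http://{service}.{namespace}.svc.cluster.local:{port}"


def otlp_env(service: str, namespace: str, protocol: str = "grpc") -> list[dict]:
    """Return the env entries an OTel SDK reads to export all three signals over OTLP."""
    env = [
        {
            "name": "OTEL_EXPORTER_OTLP_ENDPOINT",
            "value": agent_otlp_endpoint(service, namespace, protocol),
        },
        {"name": "OTEL_EXPORTER_OTLP_PROTOCOL", "value": protocol},
    ]
    for signal in ("TRACES", "METRICS", "LOGS"):
        env.append({"name": f"OTEL_{signal}_EXPORTER", "value": "otlp"})
    return env


# Service and namespace names are the ones assumed in this guide.
for entry in otlp_env("datadog-agent", "datadog-operator"):
    print(f'{entry["name"]}={entry["value"]}')
```

The printed pairs should match the name/value entries in the values file, which is a quick way to sanity-check the endpoint if you rename the Service or switch to HTTP.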

Create a file named values-otlp-dd-subset.yaml with the following content:

# Keep the demo small
jaeger: { enabled: false }
prometheus: { enabled: false }
grafana: { enabled: false }
opensearch: { enabled: false }

_globals:
  otlpEnv: &otlpEnv
    - name: OTEL_EXPORTER_OTLP_ENDPOINT
      value: "http://datadog-agent.datadog-operator.svc.cluster.local:4317"
    - name: OTEL_EXPORTER_OTLP_PROTOCOL
      value: "grpc"
    - name: OTEL_TRACES_EXPORTER
      value: "otlp"
    - name: OTEL_METRICS_EXPORTER
      value: "otlp"
    - name: OTEL_LOGS_EXPORTER
      value: "otlp"

adservice: { envOverrides: *otlpEnv }
cartservice: { envOverrides: *otlpEnv }
checkoutservice: { envOverrides: *otlpEnv }
currencyservice: { envOverrides: *otlpEnv }
emailservice: { envOverrides: *otlpEnv }
frontend: { envOverrides: *otlpEnv }
frontendproxy: { envOverrides: *otlpEnv }
imageprovider: { envOverrides: *otlpEnv }
paymentservice: { envOverrides: *otlpEnv }
productcatalogservice: { envOverrides: *otlpEnv }
quoteservice: { envOverrides: *otlpEnv }
recommendationservice: { envOverrides: *otlpEnv }
shippingservice: { envOverrides: *otlpEnv }

# keep loadgen off by default
loadgenerator:
  replicaCount: 0

3) Install the OTel demo with the values file

helm upgrade --install shop-dd open-telemetry/opentelemetry-demo \
  -n otel-dd -f values-otlp-dd-subset.yaml

4) Access the OTel Astronomy Shop UI & tools

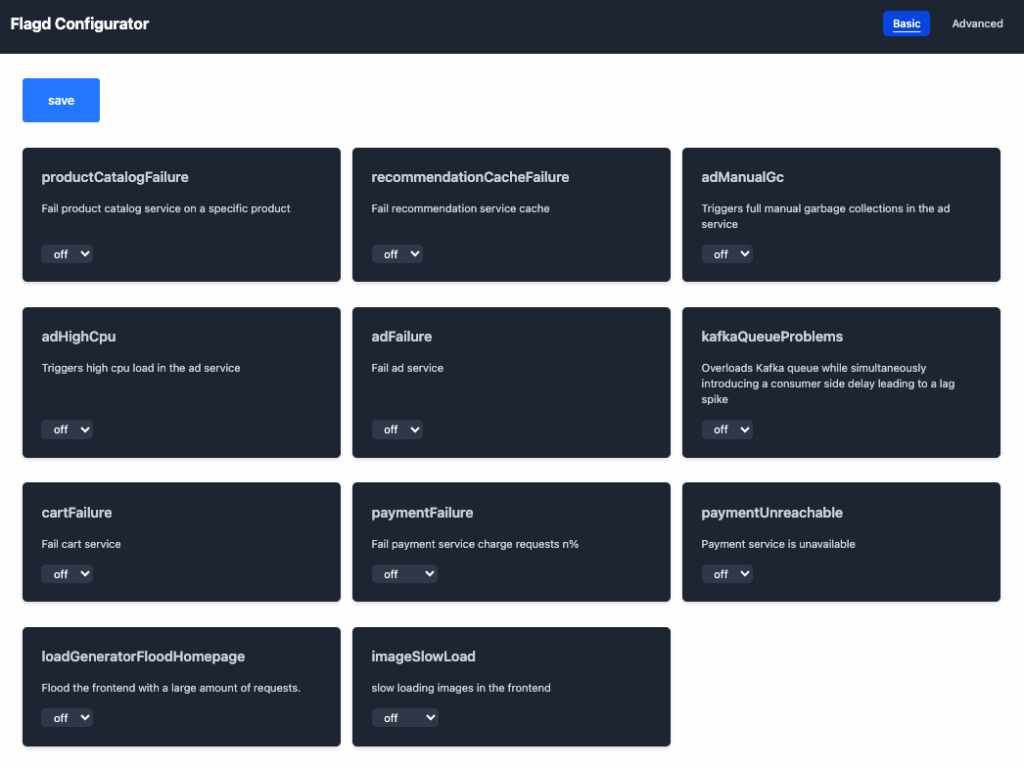

kubectl -n otel-dd port-forward svc/frontend-proxy 8080:8080

- Astronomy Shop UI: http://localhost:8080/

- Feature Flags UI: http://localhost:8080/feature/
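If the pages above do not load, it can help to check whether anything is listening on the forwarded port at all. The following is a minimal sketch using only the Python standard library. `wait_for_port` is a hypothetical helper written for this guide, and the timeout values are arbitrary.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False after `timeout` seconds."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something (e.g. kubectl port-forward) is listening.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    ready = wait_for_port("localhost", 8080, timeout=5)
    print("port-forward is up" if ready else "nothing listening on localhost:8080 yet")
```

A successful TCP connect only shows that the port-forward is running. It does not prove that the frontend proxy behind it is healthy, so treat it as a first check rather than a health probe.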

Tip: pause the load generator to keep a small footprint for our local setup. We can browse the webstore manually to generate traffic.

kubectl -n otel-dd scale deploy load-generator --replicas=0

Explore OpenTelemetry Data in Datadog

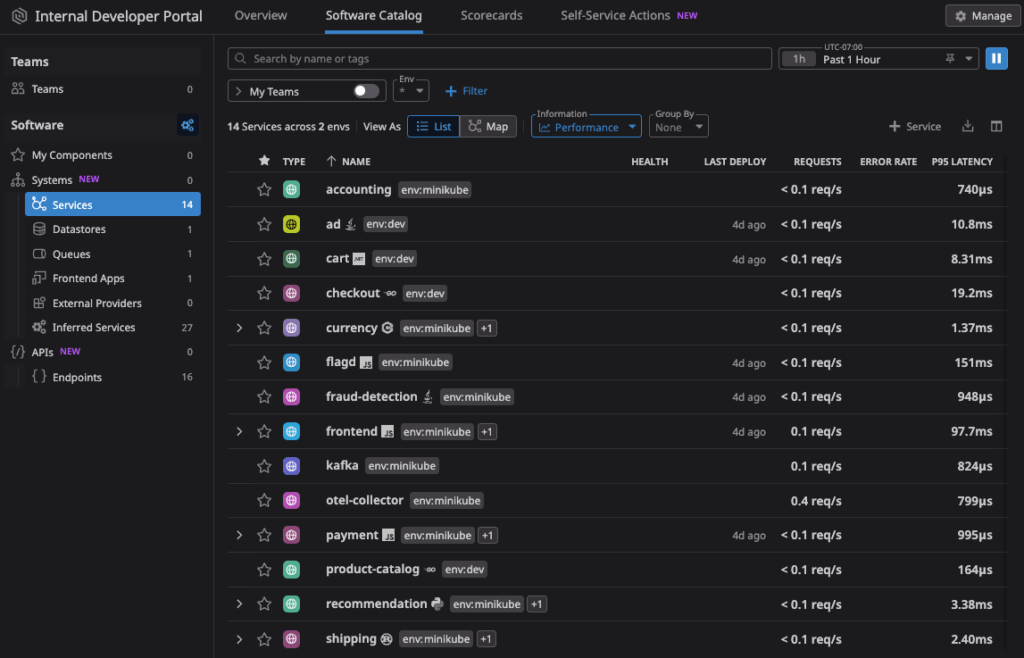

Open APM > Software Catalog to view all running services of the OTel demo

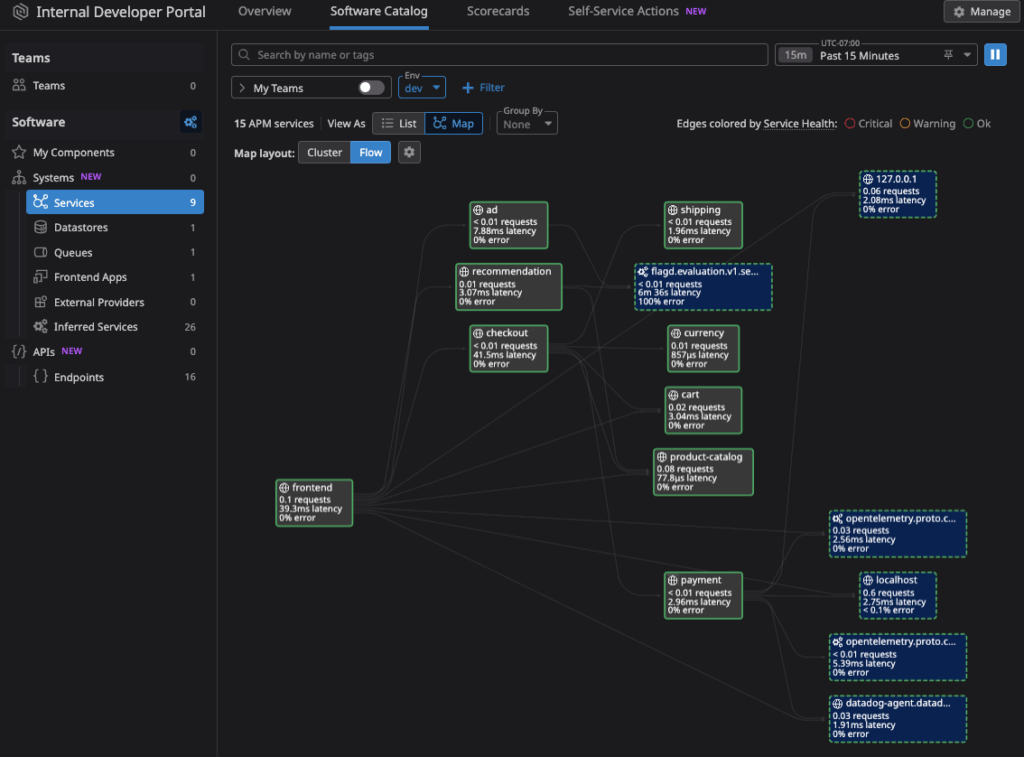

Switch to Map view and change Map layout to Flow to see the service topology and dependencies

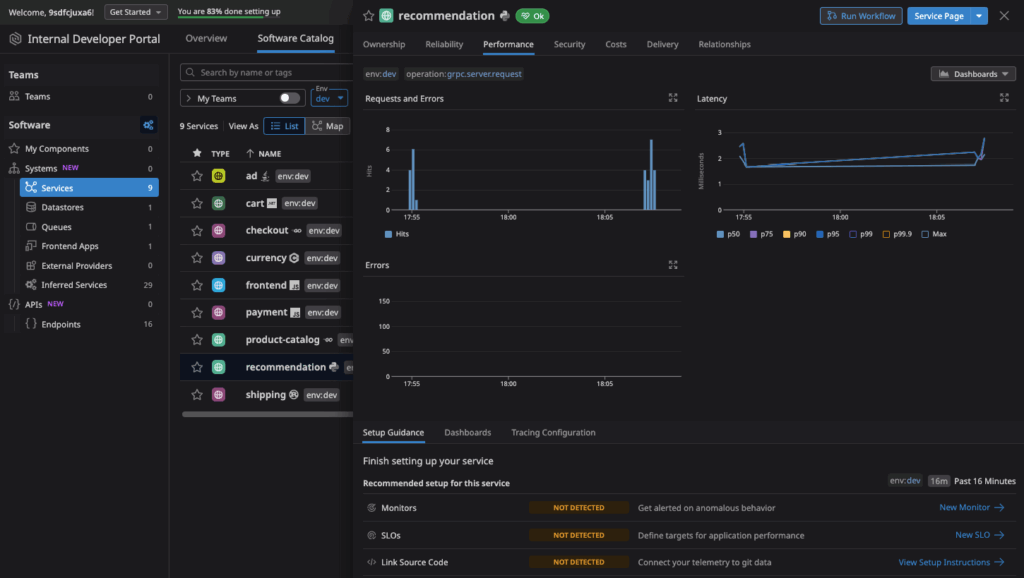

Select a service (e.g. recommendation) to view its performance metrics (such as request throughput, latency, and errors) in a side panel without leaving the page

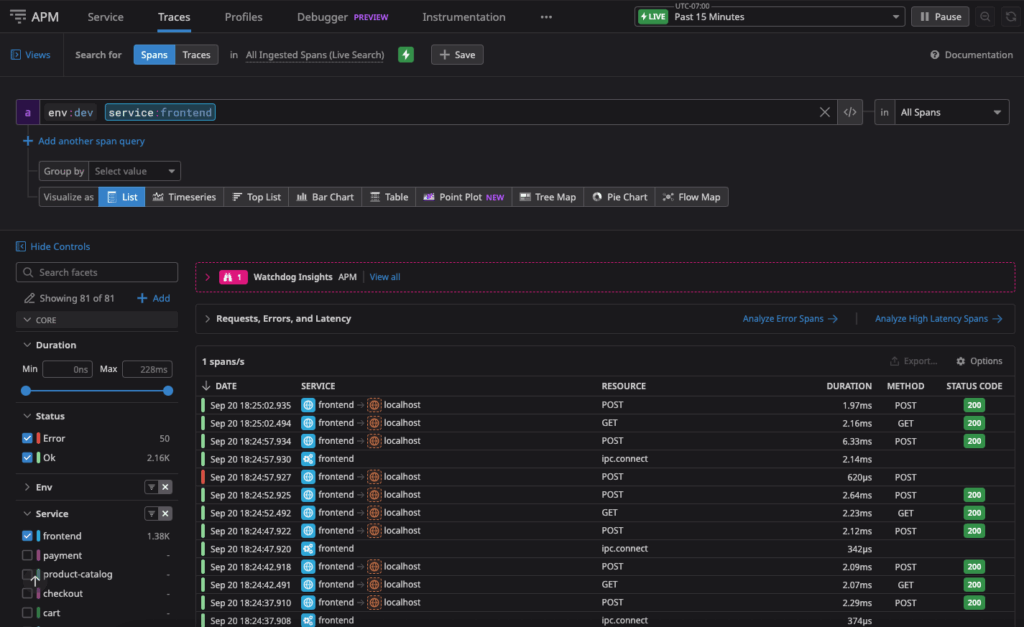

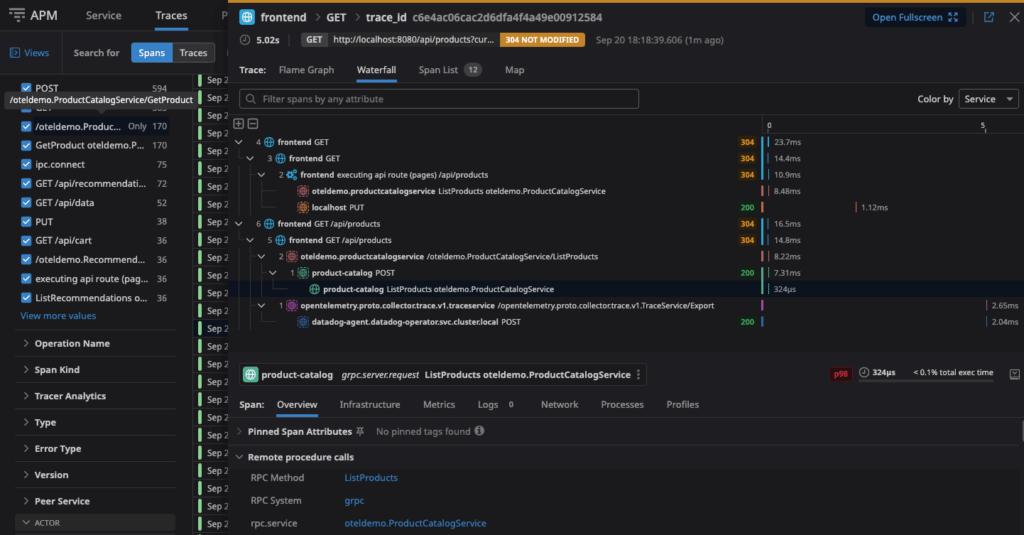

Open APM > Traces and filter by the frontend service (the Astronomy shop UI)

Open a trace and select a span of interest to view the end-to-end trace details. The waterfall timeline shows each hop the request traverses and how much time is spent on each, which makes it ideal for troubleshooting performance issues (e.g., slow services, chatty calls, downstream errors).
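To make the idea of "where time is spent" concrete, here is a small Python sketch that computes per-span self time (a span's duration minus the time spent in its direct children) on a made-up trace. The `Span` type, the span data, and the assumption that children run sequentially are all illustrative; this is not how Datadog computes its waterfall.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Span:
    span_id: str
    parent_id: Optional[str]
    service: str
    duration_ms: float


def self_times(spans: list[Span]) -> dict[str, float]:
    """Self time per span: its duration minus the summed duration of its direct children.

    Assumes children run one after another; with parallel children the result
    would go negative, so it is clamped at zero.
    """
    child_total: dict[str, float] = {}
    for s in spans:
        if s.parent_id is not None:
            child_total[s.parent_id] = child_total.get(s.parent_id, 0.0) + s.duration_ms
    return {
        s.span_id: max(s.duration_ms - child_total.get(s.span_id, 0.0), 0.0)
        for s in spans
    }


# Hypothetical trace: frontend calls recommendation (which calls product-catalog) and ad.
trace = [
    Span("a", None, "frontend", 120.0),
    Span("b", "a", "recommendation", 80.0),
    Span("c", "b", "product-catalog", 30.0),
    Span("d", "a", "ad", 10.0),
]

times = self_times(trace)
slowest = max(trace, key=lambda s: times[s.span_id])
print(slowest.service, times[slowest.span_id])  # recommendation 50.0
```

In this toy trace the frontend span is the longest overall, but most of its time is spent waiting on downstream calls; the recommendation span has the largest self time, which is the kind of signal the waterfall view surfaces visually.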

Troubleshooting Workloads

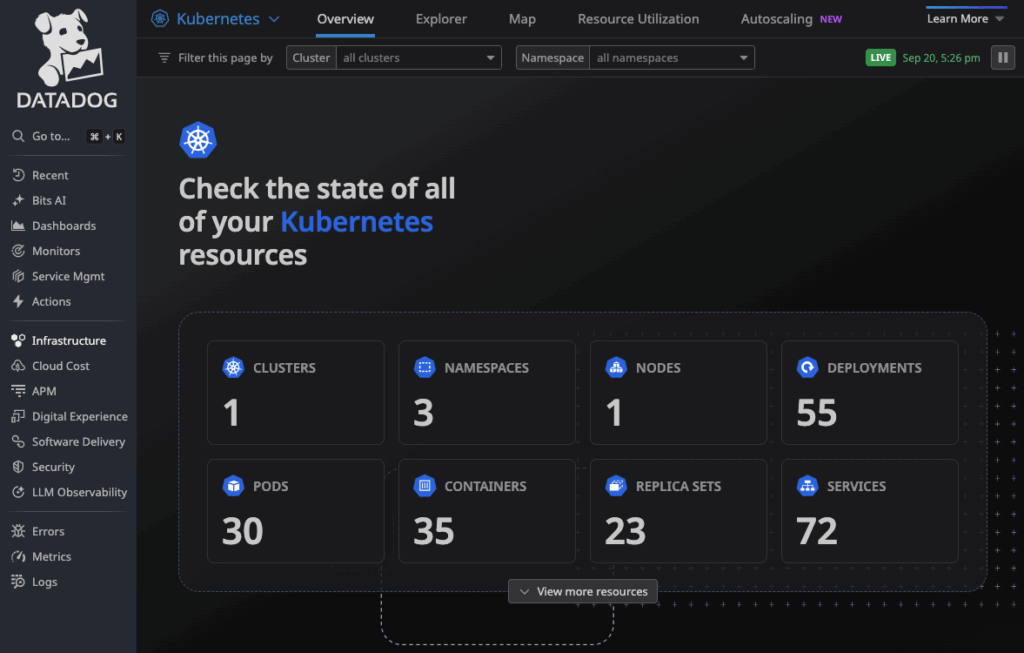

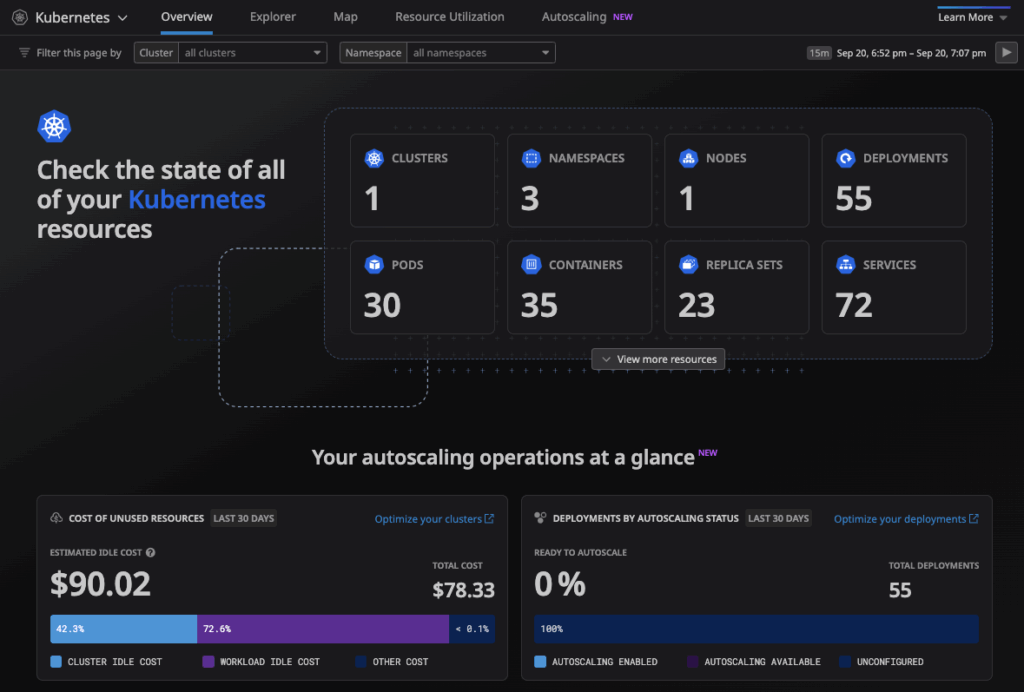

Datadog provides many tools to help you monitor and troubleshoot workloads running in Kubernetes clusters. The Kubernetes Overview provides a high-level view of your cluster’s health and performance. It’s a great starting point for troubleshooting, as it summarizes the status of your cluster’s nodes and pods.

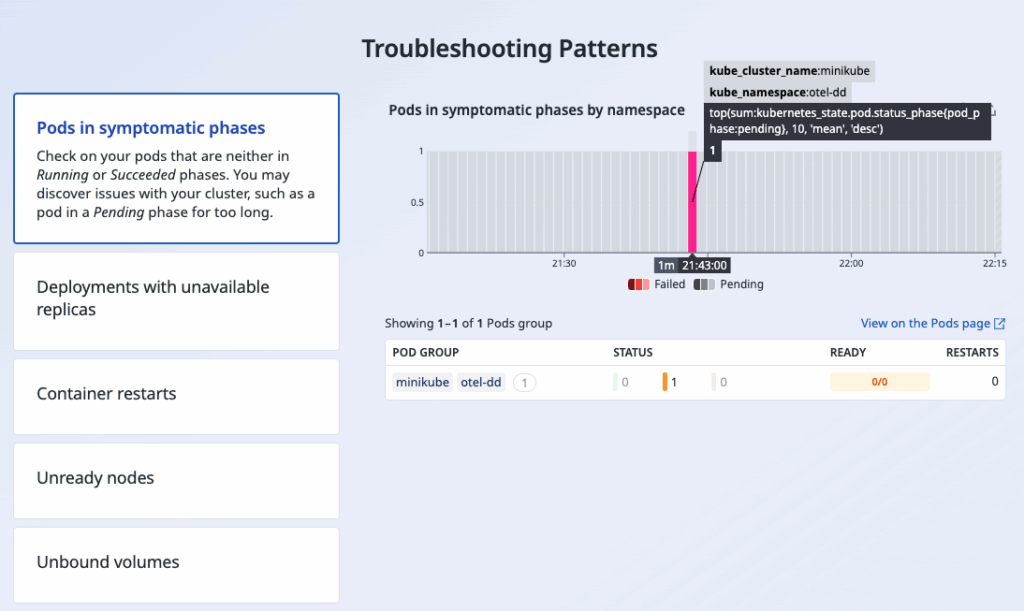

Look at the Troubleshooting Patterns section and hover over the Pods in symptomatic phases graph: one pod is stuck in the Pending state.

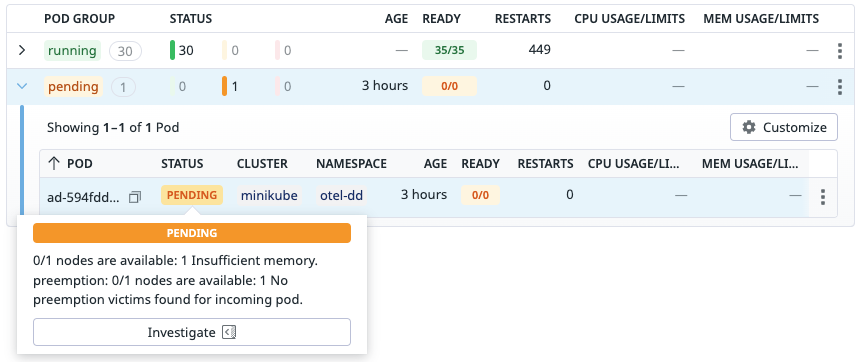

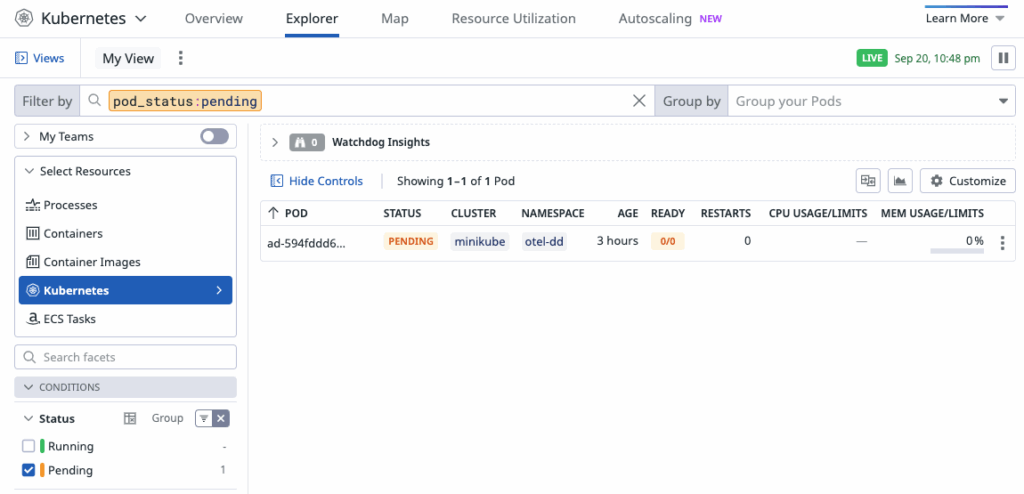

Navigate to Kubernetes Explorer, click Pods, and look at the Pending pod group: you can tell that there’s an issue with the ad service. Hover over the PENDING status to see why the pod is stuck: Insufficient memory.

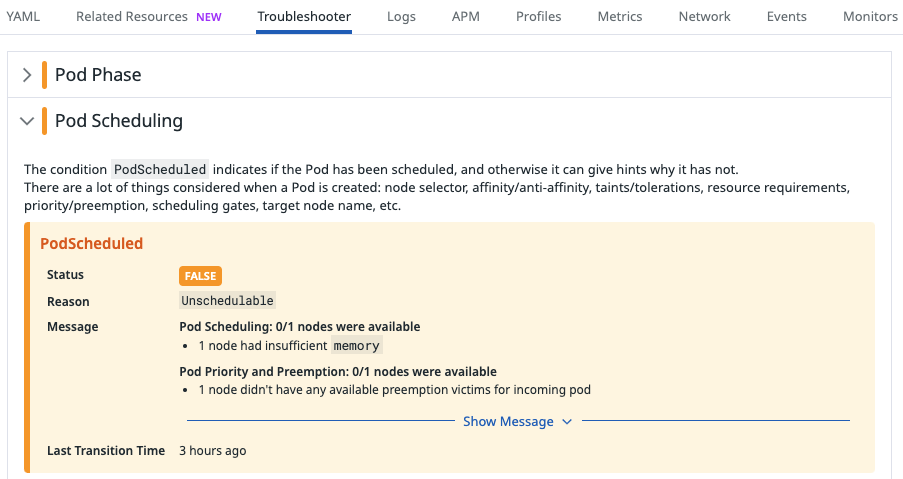

Click Investigate to open the pod’s Troubleshooter tab. It gives a more comprehensive explanation of the scheduling problem. In this case, it reports that our minikube cluster needs more memory to run the ad service.
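The same scheduling explanation is recorded on the pod itself, in its PodScheduled condition. As a minimal sketch, the Python below extracts that message from a pod list shaped like the JSON returned by `kubectl get pods -o json`. The sample data, including the pod names, is made up for illustration, and `pending_reasons` is a hypothetical helper.

```python
def pending_reasons(pod_list: dict) -> dict[str, str]:
    """Map each Pending pod's name to the scheduler message from its PodScheduled condition."""
    reasons = {}
    for pod in pod_list.get("items", []):
        status = pod.get("status", {})
        if status.get("phase") != "Pending":
            continue
        message = "no scheduling condition reported"
        for cond in status.get("conditions", []):
            # An unschedulable pod has PodScheduled=False with an explanatory message.
            if cond.get("type") == "PodScheduled" and cond.get("status") == "False":
                message = cond.get("message", cond.get("reason", message))
        reasons[pod["metadata"]["name"]] = message
    return reasons


# Hypothetical, trimmed-down pod list (pod names are placeholders).
sample = {
    "items": [
        {
            "metadata": {"name": "ad-example"},
            "status": {
                "phase": "Pending",
                "conditions": [
                    {
                        "type": "PodScheduled",
                        "status": "False",
                        "reason": "Unschedulable",
                        "message": "0/1 nodes are available: 1 Insufficient memory.",
                    }
                ],
            },
        },
        {"metadata": {"name": "cart-example"}, "status": {"phase": "Running"}},
    ]
}

for name, why in pending_reasons(sample).items():
    print(f"{name}: {why}")
```

Against a real cluster you would feed it the parsed output of the kubectl command instead of `sample`. The exact wording of the scheduler message varies between Kubernetes versions.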

With the ad service down, the webstore’s ad banner renders blank.

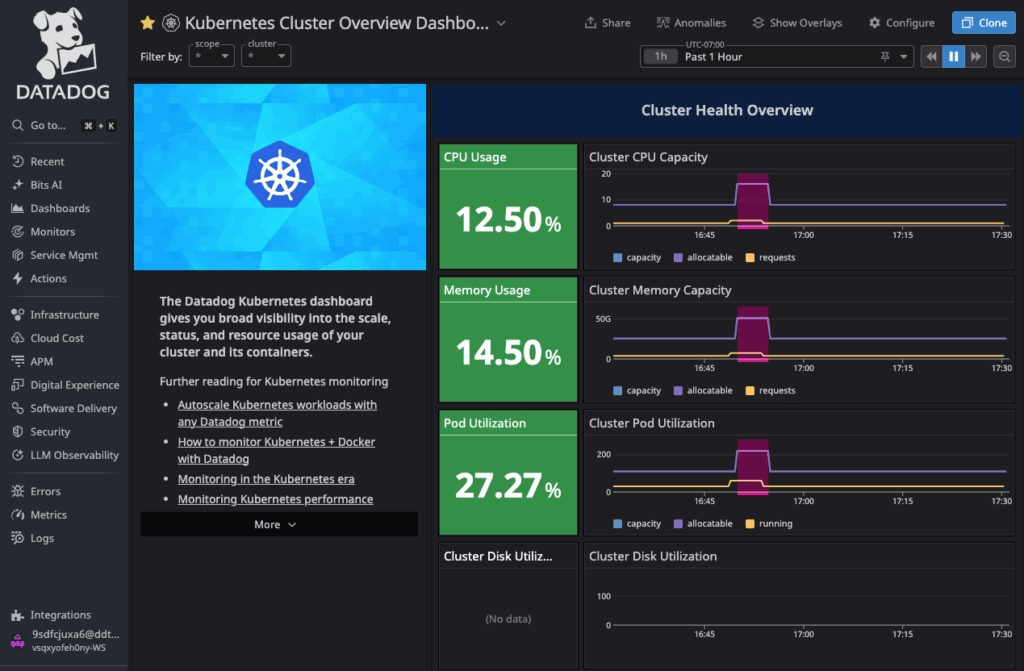

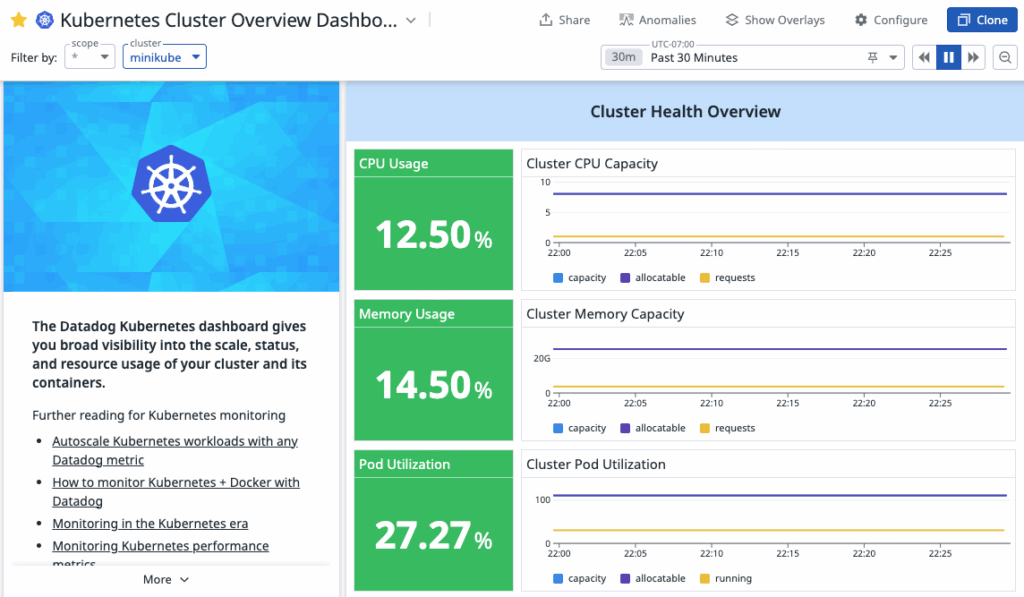

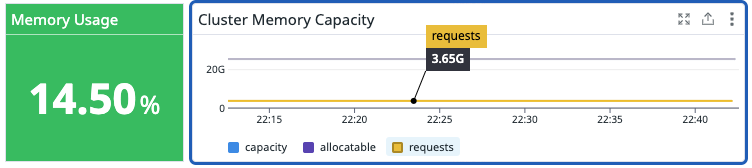

Let’s open the Kubernetes Cluster Overview Dashboard to confirm whether the cluster has enough memory resources to run the workload.

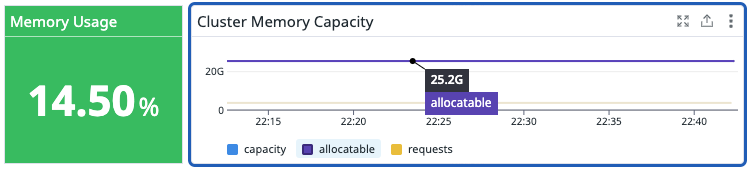

Hover over the allocatable line in the Cluster Memory Capacity graph: the cluster has 25.2G of allocatable memory, but only 3.65G is being requested. So there should be enough capacity for the ad service.

Our cluster is healthy and has enough memory to run the ad service, so why is the node reported as having insufficient memory? Let’s look at the pod’s spec to confirm that it’s requesting a reasonable amount of memory. Navigate to Kubernetes Explorer > Pod, then in the facets panel on the left, click Pending under Status to show only resources in the Pending state.

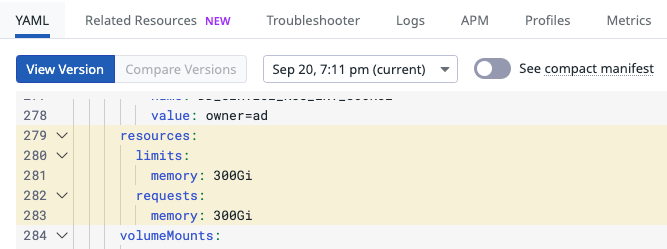

Click on the pending pod (i.e. ad-594fddd6.) to see the details. Navigate to the YAML tab to inspect its metadata and spec. Scrolling down to the resources section, we can see that the pod is configured to request 300Gi of memory, which far exceeds the allocatable memory in our cluster. This is clearly a typo.
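The gap between 300Gi and 300Mi is easy to miss by eye, so here is a small Python sketch that converts Kubernetes-style memory quantities to bytes and compares a request against the remaining headroom. It handles only the common binary and decimal suffixes, and it treats the dashboard figures (25.2G allocatable, 3.65G requested) as decimal gigabytes, which is an assumption. `parse_memory` and `fits` are hypothetical helpers, not part of any Kubernetes client.

```python
from decimal import Decimal

# Binary (Ki, Mi, ...) and decimal (k, M, ...) suffixes; other forms are not handled here.
_SUFFIXES = {
    "Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12,
}


def parse_memory(quantity: str) -> int:
    """Convert a quantity such as '300Mi' or '25.2G' to bytes; a bare number is bytes."""
    for suffix, factor in _SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(Decimal(quantity[: -len(suffix)]) * factor)
    return int(Decimal(quantity))


def fits(request: str, allocatable: str, already_requested: str = "0") -> bool:
    """True if the request is no larger than allocatable minus what is already requested."""
    headroom = parse_memory(allocatable) - parse_memory(already_requested)
    return parse_memory(request) <= headroom


# Figures read off the dashboard in this walkthrough (unit interpretation is an assumption).
ALLOCATABLE = "25.2G"
REQUESTED = "3.65G"

for req in ("300Gi", "300Mi"):
    print(req, "fits" if fits(req, ALLOCATABLE, REQUESTED) else "does not fit")
# 300Gi does not fit
# 300Mi fits
```

The real scheduler evaluates requests per node rather than per cluster. On a single-node minikube the two views coincide, which is why this simplified check reaches the same conclusion.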

To fix the issue, we can modify the manifest for the ad workload, changing both the memory request and limit to the correct setting (i.e. 300Mi):

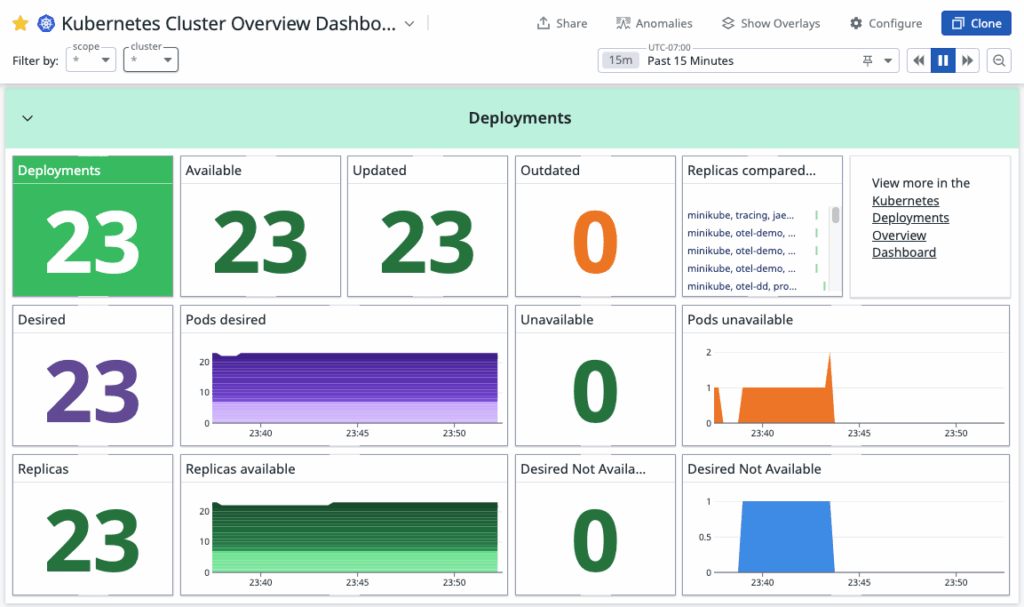

kubectl set resources deploy/ad --requests=memory=300Mi --limits=memory=300Mi -n otel-dd

Return to the Kubernetes Cluster Overview Dashboard. Under the Deployment section, we can confirm that the issue is fixed and all pods are up and running, because the number of Desired pods equals the number Available (i.e. 23).

The ad banner is back online and displaying again!

Create a Monitor to stay alert

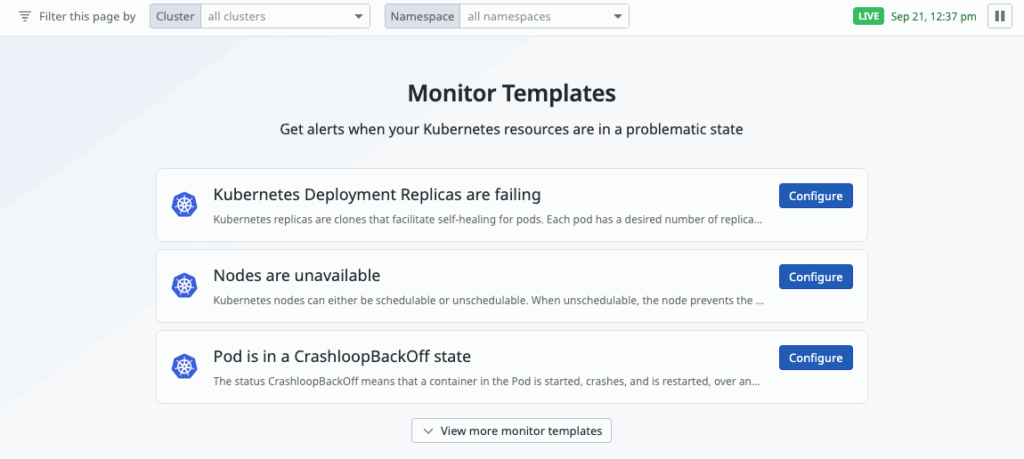

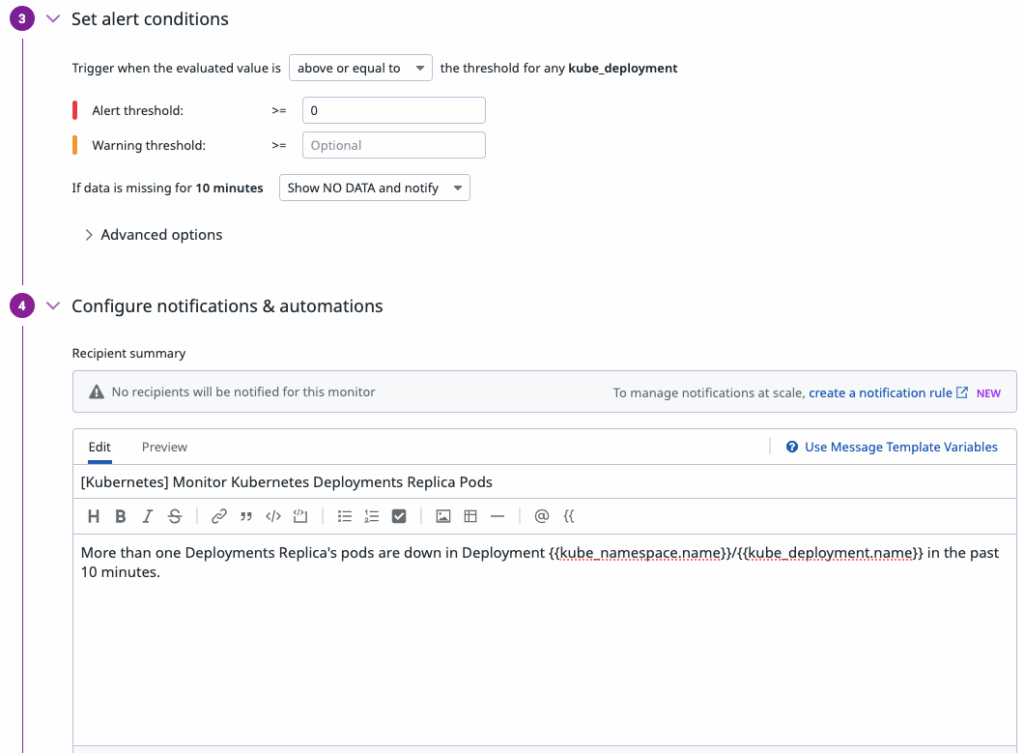

Let’s create a monitor so that we get alerted if similar issues recur and can fix them more quickly. Datadog provides many built-in monitor templates. Navigate to the Kubernetes Overview > Monitors Templates section:

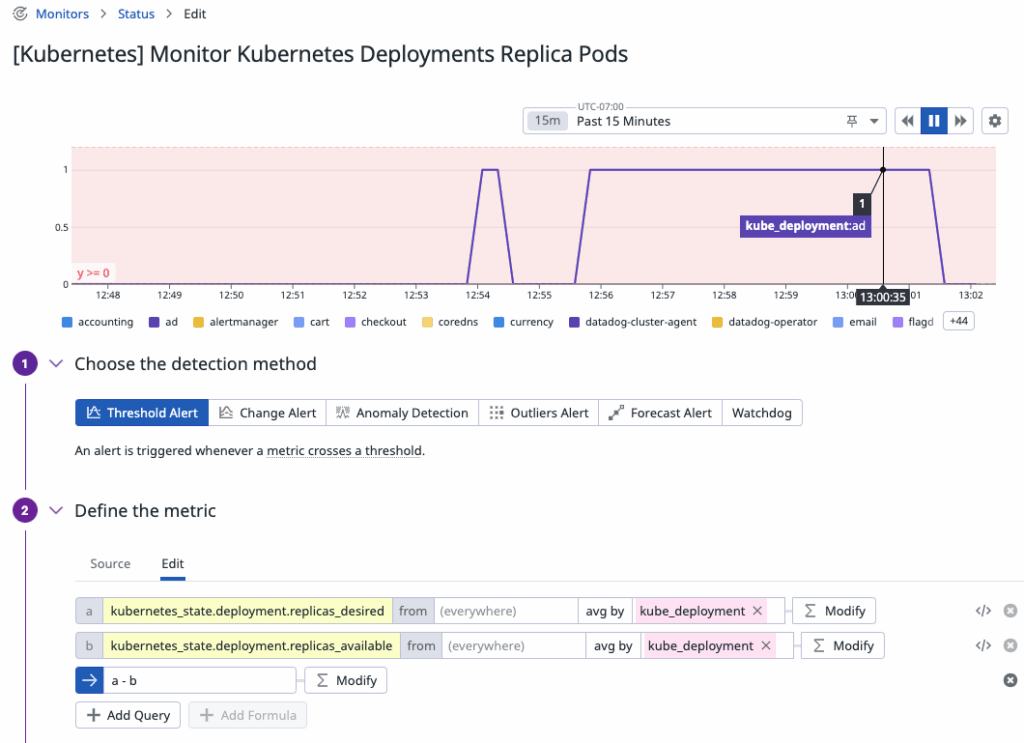

Locate the Kubernetes Deployment Replicas are failing monitor and click Configure. This opens a pre-configured metric monitor that tracks replicas that are not available, such as pods stuck in the Pending state.

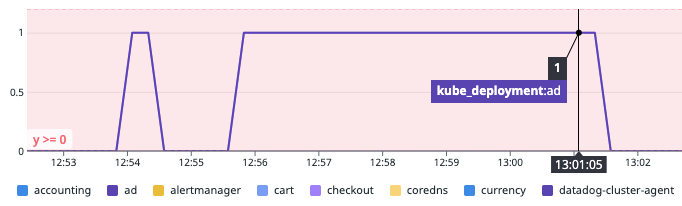

Under Define the metric, the query for this monitor is the difference between the number of Deployment replicas desired and the number available. You can see from the graph that the value for kube_deployment:ad was 1 while the pod was stuck in the Pending state and has since returned to 0.

This is a useful metric to track for any workload. We can also configure the alert to send notifications via email, text, or Slack to the right team members so they can look at the issue as soon as the alert triggers.
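The logic behind a "desired minus available" monitor can be sketched in a few lines of Python. This is only an illustration of the idea: the threshold, the number of consecutive samples required, and the sample values are hypothetical and do not reflect how the Datadog template evaluates its query.

```python
def replica_gap(desired: int, available: int) -> int:
    """Number of replicas a Deployment wants but does not currently have available."""
    return desired - available


def should_alert(samples: list[tuple[int, int]], threshold: int = 0, min_points: int = 3) -> bool:
    """Alert when the gap stays above `threshold` for the last `min_points` samples.

    Requiring several consecutive samples avoids alerting on a brief rollout blip.
    """
    if len(samples) < min_points:
        return False
    return all(replica_gap(d, a) > threshold for d, a in samples[-min_points:])


# (desired, available) samples for the ad Deployment: stuck in Pending, then fixed.
while_pending = [(1, 0), (1, 0), (1, 0), (1, 0)]
after_fix = while_pending + [(1, 1)]

print(should_alert(while_pending))  # True
print(should_alert(after_fix))      # False
```

The consecutive-sample requirement plays the same role as an evaluation window in a monitor: a short gap during a normal rollout stays quiet, while a pod that remains unschedulable triggers the alert.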

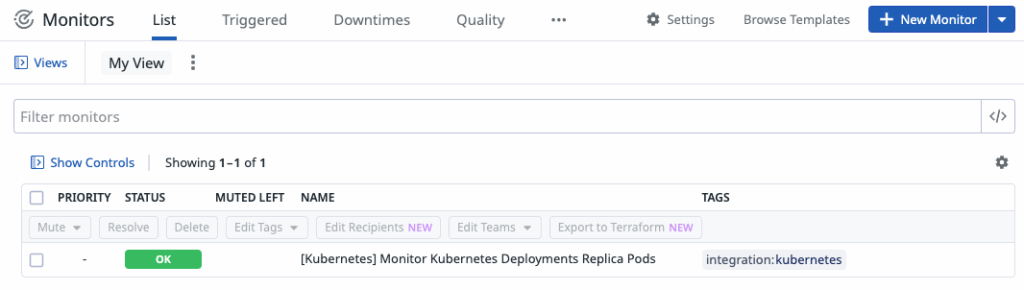

Navigate to the Monitors page and you can see your new monitor in the list:

Now you’ve got guardrails in place. If a similar issue pops up, your team will hear about it fast.

To summarize, in this troubleshooting scenario we:

- Used Kubernetes Overview and Kubernetes Explorer to identify a pod stuck in Pending due to memory constraints.

- Verified overall capacity in the Kubernetes Cluster Overview dashboard.

- Inspected the Pod spec in Kubernetes Explorer and found a typo in the memory request.

- Fixed the manifest and redeployed the ad service, restoring the banner.

- Created a Monitor so any future unschedulable Pods trigger an alert.

Thanks for reading, and I hope this walkthrough was useful.